Energy Robotics Guest Blog–Robot skills: Transforming raw sensor data into actionable information

Posted on September 28

Note: We are thrilled to present a guest blog by HUVR Partner Network member Energy Robotics. If you’re interested in our guest-blog program, email [email protected]

These are exciting times for robotics. Thanks to rapid innovation, robots can now carry out an ever-growing variety of tasks. This matters: as labor shortages affect more and more industries, increasingly capable robots can step in and help industrial operations run safely and efficiently. With more robots in use, operators can capture higher-quality data in greater volume and variety. But most of our customers aren’t really interested in large amounts of raw data; they want practical insights.

Skills: Turning Raw Data into Actionable Information

Right now, turning data into actionable information still relies heavily on human input and judgment. But as technology evolves, robots are becoming smarter. We can now equip them with different skills – containerised applications that process data captured by a robot’s sensors and enable it to perform specific tasks autonomously. These range from detecting gas leaks and monitoring safety equipment to reading analog devices and tracking human movement on site.

An Example: Reading Analog Devices

To understand how exactly a skill delivers actionable information, let’s take the example of reading analog gauges. Robots equipped with this skill can precisely detect the needle position on an analog device and accurately record the reading. The robot also raises an alarm if the reading is above or below a predefined threshold value. To top it off, the skill even influences robot behaviour so that the robot captures a clear image of the device, free of distortions caused by elements such as rain and sunlight. This is how raw data, in the form of a picture of an analog device, is reliably collected and transformed into actionable information through machine vision and AI.
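The last two steps, turning a detected needle position into a reading and checking it against thresholds, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the gauge has a linear scale, an upstream vision model has already estimated the needle angle, and every name (`GaugeSpec`, `needle_angle_to_reading`, `check_thresholds`) and number is hypothetical rather than part of the actual skill.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GaugeSpec:
    """Hypothetical description of an analog gauge with a linear scale."""
    min_angle_deg: float  # needle angle at the lowest scale mark
    max_angle_deg: float  # needle angle at the highest scale mark
    min_value: float      # value printed at the lowest scale mark
    max_value: float      # value printed at the highest scale mark


def needle_angle_to_reading(angle_deg: float, spec: GaugeSpec) -> float:
    """Linearly interpolate a needle angle onto the gauge's value scale."""
    span = spec.max_angle_deg - spec.min_angle_deg
    if span == 0:
        raise ValueError("gauge angle range must be non-zero")
    fraction = (angle_deg - spec.min_angle_deg) / span
    return spec.min_value + fraction * (spec.max_value - spec.min_value)


def check_thresholds(reading: float, low: float, high: float) -> Optional[str]:
    """Return an alarm message if the reading is outside [low, high], else None."""
    if reading < low:
        return f"ALARM: reading {reading:.2f} below threshold {low:.2f}"
    if reading > high:
        return f"ALARM: reading {reading:.2f} above threshold {high:.2f}"
    return None


# Assumed example: a 0-10 bar manometer whose needle sweeps 0 to 270 degrees.
spec = GaugeSpec(min_angle_deg=0.0, max_angle_deg=270.0,
                 min_value=0.0, max_value=10.0)
reading = needle_angle_to_reading(243.0, spec)  # needle at 90% of full sweep
alarm = check_thresholds(reading, low=2.0, high=8.0)
print(reading, alarm)
```

In a real skill the hard part is the step this sketch takes for granted: locating the gauge and estimating the needle angle from an image that may be affected by rain, dirt or glare.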

Skill Store: A versatile open-source platform for robot skills

To ensure our customers have access to a wide selection of solutions to their challenges, we’re setting up our own Skill Store.

Similar to the app store on your smartphone, this is a versatile, open-source platform that brings together customers and third-party developers. It gives customers access to a variety of skills – all they need to do is select what they want and add it to their robots at the click of a button. This enables users to transfer skills to an entire fleet of varying robot types instead of training each robot individually to perform specific tasks.

We’re developing some of these solutions in-house, and some of them combine several individual skills. Take the man-down detector: when a robot sees someone on the ground, it sounds an alert and then waits for confirmation as to whether the person is injured. If necessary, it can then find the nearest location with network coverage and call for help. The majority of skills, however, will be developed by external experts who are specialists in their respective fields of computer vision and AI. This will help accelerate the overall development of robot skills.

To give customers as much freedom as possible, we’re also integrating AWS Marketplace into the Skill Store for greater access to third-party computer vision and AI applications. Crucially, the Skill Store also gives customers hardware independence, so there’s no vendor lock-in. And for developers, it’s a great way to market and monetise their work. Our sandbox provides a testing environment, and the Skill Store lets them add a revenue channel to their services while opening up access to some real industry giants.

Instant value without the need for robotics expertise

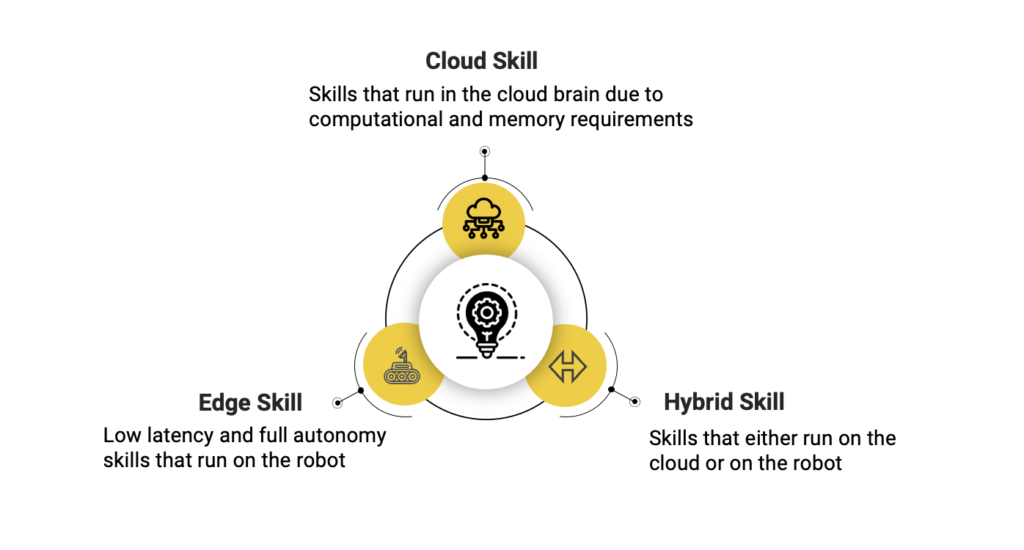

Let’s take a closer look at the types of skills available and how they function. Essentially, skills are containerised applications that process sensor data and influence robot behaviour to perform specific tasks. Depending on the robot and the skill, these are deployed either in the cloud or on the robots themselves. Here are some examples:

Edge skills

Edge skills are vital for monitoring critical aspects of an industrial plant. They run autonomously on the robot itself so that they can change its behaviour immediately. Take manometer reading, for example. Rain, dirt or bright sunlight may prevent the robot from seeing the meter’s needle. If that happens, the robot needs to be able to change position quickly and autonomously.

Cloud skills

Skills that demand more processing power and memory run in the cloud, which also helps extend a robot’s battery life. These could include tasks that measure changes over time, such as thermal imaging or temperature monitoring.

Hybrid skills

Skills like gauge reading can run either in the cloud or on the robot, depending on connectivity and resource availability.
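To make the edge/cloud/hybrid distinction concrete, here is a minimal Python sketch of how a hybrid skill might decide where to run. It is not the Skill Store's actual scheduling logic: the `RobotStatus` and `SkillRequirements` types, the `choose_placement` function, the 20% battery cut-off and the "prefer the robot when everything fits" default are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    EDGE = "edge"    # run on the robot itself
    CLOUD = "cloud"  # run on remote infrastructure


@dataclass(frozen=True)
class RobotStatus:
    """Hypothetical snapshot of a robot's connectivity and resources."""
    network_ok: bool        # is a usable uplink available right now?
    free_memory_mb: int     # memory currently free on the robot
    battery_percent: float  # remaining battery charge


@dataclass(frozen=True)
class SkillRequirements:
    """Hypothetical resource needs of a skill."""
    memory_mb: int                # memory needed to run on the robot
    needs_instant_reaction: bool  # must it change robot behaviour at once?


def choose_placement(status: RobotStatus, skill: SkillRequirements,
                     min_battery_percent: float = 20.0) -> Placement:
    """Pick where to run a hybrid skill (assumed policy, for illustration)."""
    fits_on_robot = status.free_memory_mb >= skill.memory_mb
    if not status.network_ok:
        # Without connectivity the cloud is not an option.
        if not fits_on_robot:
            raise RuntimeError("skill fits neither on the robot nor in the cloud")
        return Placement.EDGE
    if skill.needs_instant_reaction and fits_on_robot:
        # Latency-critical skills stay on the robot when they fit.
        return Placement.EDGE
    if not fits_on_robot or status.battery_percent < min_battery_percent:
        # Offload heavy work, or any work when the battery is low.
        return Placement.CLOUD
    return Placement.EDGE


# Assumed example: good connectivity, but the battery is running low.
status = RobotStatus(network_ok=True, free_memory_mb=2048, battery_percent=15.0)
gauge_reading = SkillRequirements(memory_mb=512, needs_instant_reaction=False)
placement = choose_placement(status, gauge_reading)
print(placement.value)
```

With the values above the skill is offloaded to the cloud to save battery; the same skill would stay on the robot if the network dropped out.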

Ultimately, this means that customers get instant value without having to know anything about robotics themselves. They can focus on their own core business and rely on our framework to carry out their computer vision or AI tasks. And, importantly for IT security and safety, the data stays in our ecosystem.

What’s next: Public Beta

We’ll be entering a public beta phase for the Skill Store later in the first quarter of 2022.

If you’re a developer and would like to participate, please get in touch via the form below.